Our company has been providing professional SEO services to customers since the birth of the internet. While our approaches have developed over time, our overall aim hasn't: to get our customers' web pages ranking on the first page for appropriate keywords, using only ethical and long-lasting techniques.

Tuesday, July 31, 2018

Homes would not have been destroyed if Surrey had enough firefighters, union claims

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/homes-would-not-been-destroyed-14968186

Surrey in First World War: August 1918 puts county's regiments on the front foot

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/surrey-first-world-war-august-14916109

Plans for a dedicated dinosaur museum in Surrey a 'genuine possibility'

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/plans-dedicated-dinosaur-museum-surrey-14956571

Famous Ivy restaurant group opens first Hampshire brasserie - take a peek inside

from Surrey Live - News https://www.getsurrey.co.uk/whats-on/food-drink-news/famous-ivy-restaurant-group-opens-14968318

Prezzo in Reigate becomes the latest high street casualty

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/prezzo-reigate-becomes-latest-high-14972533

Body of man found in River Wey in Ripley following specialist search

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/body-man-found-river-wey-14975467

16 ways to make the most of the Surrey sunshine on your lunch break

from Surrey Live - News https://www.getsurrey.co.uk/whats-on/whats-on-news/17-ways-make-most-sunshine-13278346

Council worker who appeared in BBC's Food Inspectors named as employee who died in Reigate

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/council-worker-who-appeared-bbcs-14975088

Guildford councillors back town's legendary Star Inn music venue against noise complaint

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/guildford-councillors-back-towns-legendary-14972856

Plans to demolish Walton's Birds Eye building approved to meet Elmbridge's 'extraordinary' housing need

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/plans-demolish-waltons-birds-eye-14971609

Footage as fire takes hold of car in Albury metres away from woodland

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/footage-fire-takes-hold-car-14969106

Wanted man arrested in connection with robbery of Woking pensioner who later died in hospital

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/wanted-man-arrested-connection-robbery-14973453

Cash machine 'removed from wall' at Guildford College and money stolen

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/cash-machine-removed-wall-guildford-14970840

Burglar steals former Aldershot Garrison commander's medals earned over 40 years of service

from Surrey Live - News https://www.getsurrey.co.uk/news/hampshire-news/burglar-steals-former-aldershot-garrison-14972114

Woman airlifted to hospital with 'serious injuries' after being hit by car in Burpham

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/woman-airlifted-hospital-serious-injuries-14972145

School pays tribute to Oxted snowboard champion Ellie Soutter after tragic death on 18th birthday

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/school-pays-tribute-oxted-snowboard-14971565

Prince Harry and Meghan Markle to attend wedding in Surrey this weekend

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/prince-harry-meghan-markle-attend-14971630

Tributes paid to RideLondon cyclist Nigel Buchan-Swanson who died during 100-mile ride

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/tributes-paid-ridelondon-cyclist-nigel-14971760

Heatwave returns as weekend weather temperatures to rival India and north Africa

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/heatwave-returns-weekend-weather-temperatures-14971605

Better Than Basics: Custom-Tailoring Your SEO Approach

Posted by Laura.Lippay

Just like people, websites come in all shapes and sizes. They’re different ages, with different backgrounds, histories, motivations, and resources at hand. So when it comes to approaching SEO for a site, one-size-fits-all best practices are typically not the most effective way to go about it (also, you’re better than that).

An analogy might be if you were a fitness coach. You have three clients. One is a 105lb high school kid who wants to beef up a little. One is a 65-year-old librarian who wants better heart health. One is a heavyweight lumberjack who’s working to be the world’s top springboard chopper. Would you consider giving each of them the same diet and workout routine? Probably not. You’re probably going to:

- Learn all you can about their current diet, health, and fitness situations.

- Come up with the best approach and the best tactics for each situation.

- Test your way into it and optimize, as you learn what works and what doesn’t.

In SEO, consider how your priorities might be different if you saw similar symptoms — let’s say problems ranking anything on the first page — for:

- New sites vs existing sites

- New content vs older content

- Enterprise vs small biz

- Local vs global

- Type of market — for example, a news site, e-commerce site, photo pinning, or a parenting community

A new site might need more sweat equity or have previous domain spam issues, while an older site might have years of technical mess to clean up. New content may need the right promotional touch while old content might just simply be stale. The approach for enterprise is often, at its core, about getting different parts of the organization to work together on things they don’t normally do, while the approach for small biz is usually more scrappy and entrepreneurial.

With the lack of trust in SEO today, people want to know if you can actually help them and how. Getting to know the client or project intimately and proposing custom solutions shows that you took the time to get to know the details and can suggest an effective way forward. And let’s not forget that your SEO game plan isn’t just important for the success of the client — it’s important for building your own successes, trust, and reputation in this niche industry.

How to customize an approach for a proposal

Do: Listen first

Begin by asking questions. Learn as much as you can about the situation at hand, the history, the competition, resources, budget, timeline, etc. Maybe even sleep on it and ask more questions before you provide a proposal for your approach.

Consider the fitness trainer analogy again. Now that you’ve asked questions, you know that the high school kid is already at the gym on a regular basis and is overeating junk food in his attempt to beef up. The librarian has been on a low-salt paleo diet since her heart attack a few years ago, and knows she needs to exercise but refuses to set foot in a gym. The lumberjack is simply a couch potato.

Now that you know more, you can really tailor a proposed approach that might appeal to your potential client and allow you and the client to see how you might reach some initial successes.

Do: Understand business priorities

What will fly? What won’t fly? What can we push for and what’s off the table? Even if you feel strongly about particular tactics, if you can’t shape your work within a client’s business priorities you may have no client at all.

Real-world example:

Site A wanted to see how well they could rank against their biggest content-heavy SERP competitors, such as Wikipedia, but also wanted to keep a sleek, content-light experience. Big-brand SEO vendors working for Site A pushed general, content-heavy SEO best practices. Because Site A wanted solutions that fit into their current workload along with a sleek, content-light experience, they pushed back.

The vendors couldn’t keep the client because they weren’t willing to get into the client’s workload groove and go beyond general best practices. They didn’t listen to, and work within, the client’s specific business objectives.

Site A hired internal SEO resources and tested into an amount of content that they were comfortable with, in sync with technical optimization and promotional SEO tactics, and saw rankings slowly improve. Wikipedia and the other content-heavy sites are still sometimes outranking Site A, but Site A is now a stronger page one competitor, driving more traffic and leads, and can make the decision from here whether it’s worth it to continue to stay content-light or ramp up even more to get top 3 rankings more often.

The vendors weren’t necessarily wrong to suggest going content-heavy for competitive ranking, but they weren’t willing to find the middle ground of testing into light content first, and they lost a big-brand client. In its current state, Site A could ramp up content even more, but gobs of text don’t fit the sleek brand image, and it’s not proven that doing so would be worth the engineering maintenance costs for that particular site: a very practical, “not everything in SEO is most important all the time” approach.

Do: Find the momentum

It’s easiest to inject SEO where there’s already momentum, in parts of the business that are already running full speed ahead. Are there any opportunities to latch onto an effort that’s just getting underway? This may matter more than your typical best-practice priorities.

Real-world example:

Brand X had 12–20 properties (websites) at any given time, but their small SEO team could only manage about 3 at a time. Therefore the SEO team had to occasionally assess which properties they would be working with. Properties were chosen based on:

- Which ones have the biggest need or opportunities?

- Which ones have resources that they’re willing to dedicate?

- Which ones are company priorities?

The second criterion, available resources, was important. Without it, it didn’t matter that a property might have the biggest search traffic opportunity if it had no resources to dedicate to implementing the SEO team’s recommendations.
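To make that selection logic concrete, here is a hedged Python sketch of how a small team might shortlist properties. It is one possible reading of the three criteria, not Brand X's actual process: the 1-5 scores, the `Property` type, and the capacity of three are illustrative assumptions, and available resources are treated as a hard filter.

```python
from dataclasses import dataclass


@dataclass
class Property:
    name: str
    opportunity: int       # hypothetical 1-5 score: biggest need or opportunity
    has_resources: bool    # will the property dedicate people to implement fixes?
    company_priority: int  # hypothetical 1-5 score: how much the company cares


def pick_properties(props: list[Property], capacity: int = 3) -> list[str]:
    """Return the names of the properties a small SEO team should work on."""
    # Resources act as a hard filter: an opportunity nobody can implement is moot.
    eligible = [p for p in props if p.has_resources]
    # Rank the remaining properties by opportunity first, then company priority.
    eligible.sort(key=lambda p: (p.opportunity, p.company_priority), reverse=True)
    return [p.name for p in eligible[:capacity]]


if __name__ == "__main__":
    portfolio = [
        Property("A", 5, False, 5),
        Property("B", 4, True, 2),
        Property("C", 3, True, 5),
        Property("D", 4, True, 4),
        Property("E", 1, True, 1),
    ]
    print(pick_properties(portfolio))  # prints ['D', 'B', 'C']
```

Property A has the biggest opportunity on paper but never makes the shortlist, which is exactly the situation the second criterion guards against.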

Similarly, in the first example above, the vendors weren’t able to go with the client’s workflow and lost the client. Make sure you’re able to identify which wheels are moving that you can take advantage of now, in order to get things done. There may be some tactics that will have higher impact, but if the client isn’t ready or willing to do them right now, you’re pushing a boulder uphill.

Do: Understand the competitive landscape

What is this site up against? What is the realistic chance it can compete? And once you know what the competitive landscape looks like, how will that influence your approach?

Real-world example:

Site B has a section of pages competing against old, strong, well-known, content-heavy, link-rich sites. Since it’s a new site section, almost everything needs to be done for Site B: technical optimization, building content, promotion, and generating links. However, the nature of this competitive landscape shows us that being first to publish might be important here. Site B’s competitors often have content out weeks, if not months, before the actual brand owner (Site B). How? By staying on top of Site B’s press releases. The competitors created landing pages immediately after Site B put out a press release, while Site B didn’t have a landing page until the product actually launched. Once this was realized, being first to publish became an important factor. And because Site B is an enterprise site, and changing that process takes time internally, other technical and content optimization for the page templates happened concurrently, so that the pages had at least minimal technical optimization and content by the time the first-to-publish process was in place.

Site B is now generating product landing pages at the time of press release, with links to the landing pages in those press releases that are picked up by news outlets, giving Site B the first page and the first links, and this is generating more links than their top competitor in the first 7 days 80% of the time.

Site B didn’t audit the site and suggest tactics by simply checking off a list of technical optimizations prioritized by an SEO tool or ranking factors. Instead, it took a more calculated approach based on what was happening in the competitive landscape, combined with the top-priority technical and content optimizations. In this case, optimizing the site without understanding the competitive landscape would have left the competitors, who also have optimized sites with plenty of content, with a leg up, because they were cited (linked to) and picked up by Google first.

Do: Ask what has worked and hasn’t worked before

Asking this question can be very informative and help to drill down on areas that might be a more effective use of time. If the site has been around for a while, and especially if they already have an SEO working with them, try to find out what they’ve already done that has and hasn’t worked, to give you clues about which approaches might be successful.

General example:

Site C has hundreds, sometimes thousands of internal cross-links on their pages, very little unique text content, and doesn’t see as much movement for cross-linking projects as they do when adding unique text.

Site D knows from previous testing that generating more keyword-rich content on their landing pages hasn’t been as effective as implementing better cross-linking, especially since there is very little cross-linking now.

Therefore each of these sites should be prioritizing text and cross-linking tactics differently. Be sure to ask the client or potential client about previous tests or ranking successes and failures in order to learn what tactics may be more relevant for this site before you suggest and prioritize your own.

Do: Make sure you have data

Ask the client what they’re using to monitor performance. If they do not have the basics, suggest setting it up or fold that into your proposal as a first step. Define what data essentials you need to analyze the site by asking the client about their goals, walking through how to measure those goals with them, and then determining the tools and analytics setup you need. Those essentials might be something like:

- Webmaster tools set up. I like to have at least Google and Bing, so I can compare across search engines to help determine if a spike or a drop is happening in both search engines, which might indicate that the cause is from something happening with the site, or in just one search engine, which might indicate that the cause is algo-related.

- Organic search engine traffic. At the very least, you should be able to see organic search traffic by page type (ex: service pages versus product pages). At best, you can also filter by things like URL structure, country, date, referrers/source and be able to run regex queries for granularity.

- User testing & focus groups. Optional, but useful if it’s available & can help prioritization. Has the site gathered any insights from users that could be helpful in deciding on and prioritizing SEO tactics? For example, focus groups on one site showed us that people were more likely to convert if they could see a certain type of content that wouldn’t have necessarily been a priority for SEO otherwise. If they’re more likely to convert, they’re less likely to bounce back to search results, so adding that previously lower-priority content could have double advantages for the site: higher conversions and lower bounce rate back to SERPs.
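The Google-versus-Bing comparison from the webmaster tools point above can be sketched in a few lines of Python. This assumes you have already exported daily organic clicks from Google Search Console and Bing Webmaster Tools into plain lists for a "before" and an "after" window; the function names, the labels, and the 20% drop threshold are hypothetical choices, not a standard.

```python
from statistics import mean


def pct_change(before: list[float], after: list[float]) -> float:
    """Relative change in mean daily clicks between two non-empty windows."""
    base = mean(before)
    if base == 0:
        return 0.0  # nothing to drop from
    return (mean(after) - base) / base


def diagnose_drop(google_before: list[float], google_after: list[float],
                  bing_before: list[float], bing_after: list[float],
                  threshold: float = -0.20) -> str:
    """Label where a traffic drop shows up (the threshold is an assumption)."""
    google_dropped = pct_change(google_before, google_after) <= threshold
    bing_dropped = pct_change(bing_before, bing_after) <= threshold
    if google_dropped and bing_dropped:
        return "site-wide"    # both engines: look at the site itself first
    if google_dropped:
        return "google-only"  # one engine: possibly algorithm-related
    if bing_dropped:
        return "bing-only"
    return "none"


if __name__ == "__main__":
    print(diagnose_drop([100, 110, 105], [60, 55, 58], [20, 22, 21], [21, 20, 22]))
    # prints google-only
```

A drop in both engines points toward something on the site; a drop in only one is a hint, not proof, that the cause is on the search engine's side.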
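For the organic traffic breakdown described in the list above, a minimal sketch of grouping sessions by page type with regular expressions might look like this. It assumes your analytics export gives you (URL path, organic sessions) pairs; the `/services/` and `/products/` patterns are hypothetical and should be swapped for the site's real URL structure.

```python
import re
from collections import defaultdict

# Hypothetical URL patterns; adjust to the site's actual URL structure.
PAGE_TYPES = {
    "service": re.compile(r"^/services/"),
    "product": re.compile(r"^/products/"),
}


def organic_sessions_by_type(rows):
    """Sum organic sessions per page type.

    rows: iterable of (url_path, organic_sessions) tuples.
    Paths matching no pattern are counted under "other".
    """
    totals = defaultdict(int)
    for path, sessions in rows:
        for name, pattern in PAGE_TYPES.items():
            if pattern.search(path):
                totals[name] += sessions
                break  # first matching pattern wins
        else:
            totals["other"] += sessions
    return dict(totals)


if __name__ == "__main__":
    export = [
        ("/services/seo-audit", 120),
        ("/products/widget", 80),
        ("/products/gadget", 20),
        ("/blog/post", 50),
    ]
    print(organic_sessions_by_type(export))
    # prints {'service': 120, 'product': 100, 'other': 50}
```

The same idea extends to filtering by country, date, or referrer: add those columns to each row and filter before summing.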

Don’t: Make empty promises

Put simply: please, SEOs, don’t make blanket promises. Hopeful promises lead to SEOs being called snake oil salesmen. This is a real problem for all of us, and you can help turn it around.

Clients and managers will try to squeeze you until you break and give them a number or a promised rank. Don’t do it. This is like a new judoka asking the coach to promise they’ll make it to the Olympics if they sign up for the program. The level of success depends on what the judoka puts into it, what her competition looks like, and how much tenacity she has for courage, endurance, competition, resistance… You promise, she signs up, says “Oh, this takes work, so I’m only going to come to practice on Saturdays,” and everybody loses.

Goals are great. Promises are trouble. Good contracts are imperative.

Here are some examples:

- We will get you to page 1. No matter how successful you may have been in the past, every site, competitive landscape, and team behind the site is a different challenge. A promise of #1 rankings may be a selling point to get clients, but can you live up to it? What will happen to your reputation if not? This industry is small enough that word gets around when people are not doing right by their clients.

- Rehashing vague stats. I recently watched a well-known agency tell a room full of SEOs: “The search result will provide in-line answers for 47% of your customer queries”. Obviously this isn’t going to be true for every SEO in the room, since different types of queries have different SERPs, and the SERP UI constantly changes, but how many of the people in that room went back to their companies and their clients and told them that? What happens to those SEOs if that doesn’t prove true?

- We will increase traffic by n%. Remember, hopeful promises can lead to being called snake oil salesmen. If you can avoid performance promises, especially in the proposal process, by all means please do. Set well-informed goals rather than high-risk promises, and be conservative when you can. It always looks better to over-perform than to not reach a goal.

- You will definitely see improvement. Honestly, I wouldn’t even promise this unless you would *for real* bet your life on it. You may see plenty of opportunities for optimization, but you can’t be sure that they’ll implement anything, that they’ll implement things correctly, that implementations won’t get overwritten, that competitors won’t step up their game or new ones won’t emerge, or that the optimization opportunities you see will even work on this site.

Don’t: Use the same proposal for every situation at hand

If your proposal is so vague that it might actually seem to apply to any site, then you really should consider taking a deeper look at each situation at hand before you propose.

Would you want your doctor to prescribe the same thing for your (not yet known) pregnancy as the next person’s (not yet known) fungal blood infection, when you both just came in complaining of fatigue?

Do: Cover yourself in your contract

As a side note for consultants, this is a clause I include in my contract with clients for protection against being sued if clients aren’t happy with their results. It’s especially helpful for stubborn clients who don’t want to do the work and expect you to perform magic. Feel free to use it:

“Consultant makes no warranty, express, implied or statutory, with respect to the services provided hereunder, including without limitation any implied warranty of reliability, usefulness, merchantability, fitness for a particular purpose, noninfringement, or those arising from the course of performance, dealing, usage or trade. By signing this agreement, you acknowledge that Consultant neither owns nor governs the actions of any search engine or the Customer’s full implementations of recommendations provided by Consultant. You also acknowledge that due to non-responsibility over full implementations, fluctuations in the relative competitiveness of some search terms, recurring changes in search engine algorithms and other competitive factors, it is impossible to guarantee number one rankings or consistent top ten rankings, or any other specific search engine rankings, traffic or performance.”

Go get 'em!

The way you approach a new SEO client or project is critical to setting yourself up for success. And I believe we can all learn from each other’s experiences. Have you thought outside the SEO standards box to find success with any of your clients or projects? Please share in the comments!

from The Moz Blog http://tracking.feedpress.it/link/9375/9890411

West Byfleet traffic light refurbishment to cause queues for five weeks

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/west-byfleet-traffic-light-refurbishment-14971549

Monday, July 30, 2018

Former RHS Wisley gardener died after brain disorder battle but helped others in final days

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/former-rhs-wisley-gardener-died-14956824

Only 14% of stop and searches by Surrey Police last year resulted in arrest, exclusive figures reveal

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/only-14-stop-searches-surrey-14948217

Rain makes Dorking stretch of RideLondon hazardous as hundreds turn out in support of riders

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/rain-makes-dorking-stretch-ridelondon-14967460

RideLondon volunteer suffers serious injuries after collision with cyclist in Leatherhead

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/ridelondon-volunteer-suffers-serious-injuries-14970338

Two teenagers missing from Guildford found 'safe and well'

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/two-teenagers-missing-guildford-found-14970424

RMT confirms South Western Railway strike to go ahead as dispute over rail safety continues

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/rmt-confirms-south-western-railway-14969769

Aldi is now selling yoga clothes with prices starting from £5.99

from Surrey Live - News https://www.getsurrey.co.uk/whats-on/shopping/aldi-now-selling-yoga-clothes-14954124

117 parking fines issued to drivers during Farnborough Airshow

from Surrey Live - News https://www.getsurrey.co.uk/news/hampshire-news/117-parking-fines-issued-drivers-14950633

Group attempted to steal over £1,000 of alcohol from Caterham Waitrose

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/group-attempted-steal-over-1000-14957618

New 10,000 seat stadium will be 'jewel in the crown' says Woking council leader

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/new-10000-seat-stadium-jewel-14959193

You can be fined £5,000 for deliberately driving through a puddle and splashing pedestrians

from Surrey Live - News https://www.getsurrey.co.uk/news/uk-world-news/you-can-fined-5000-driving-14968085

Environment Agency grants permission for new oil well at Leith Hill and campaigners are furious

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/environment-agency-grants-permission-new-14954907

Chakra now open in Kingston and Kensington - here's what to expect at charming Indian restaurant

from Surrey Live - News https://www.getsurrey.co.uk/whats-on/food-drink-news/chakra-now-open-kingston-kensington-14968361

Trial of men accused of smuggling £50 million of cocaine into Farnborough Airport begins

from Surrey Live - News https://www.getsurrey.co.uk/news/hampshire-news/farnborough-airport-cocaine-trial-50-14969307

Trial of men accused of smuggling £15 million of cocaine into Farnborough Airport begins

from Surrey Live - News https://www.getsurrey.co.uk/news/hampshire-news/trial-men-accused-smuggling-15-14969307

Woman, 36, to stand trial following death of two horses in Normandy car crash

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/woman-36-stand-trial-following-14967645

Two children suffer 'serious injuries' after crash in Shalford

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/two-children-suffer-serious-injuries-14967671

Gang members jailed for total of 35 years for selling cocaine and heroin in Aldershot

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/aldershot-gang-members-jailed-cocaine-14962024

Can you help find missing and 'extremely vulnerable' Guildford teenagers Emily Carter-Muall and Morgan Essex

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/can-you-help-find-missing-14967300

Sunday, July 29, 2018

Take a look inside the brand new leisure centre Woking Sportsbox

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/take-look-inside-brand-new-14959630

How heart transplant saved Surrey man's life so he could go on to win medal for Team GB

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/how-heart-transplant-saved-surrey-14946877

Listen to this beautiful and moving piano performance of Chopin by 104-year-old man

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/listen-beautiful-moving-piano-performance-14950864

RideLondon Surrey 100: 10 great photos show dedicated cyclists and cheering crowds defy torrential rain

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/ridelondon-surrey-100-10-great-14966552

Appeal to raise £2,000 for Tiny Timmy after he is rescued with 37 other Chihuahuas from home near Woking

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/appeal-raise-2000-tiny-timmy-14955638

Crowdfunding page set up to thank Hartley Wintney firefighters reaches target in 2 hours

from Surrey Live - News https://www.getsurrey.co.uk/news/hampshire-news/crowdfunding-page-set-up-thank-14956970

Thames Water's massive leaks and huge dividends show 'broken system', says GMB

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/thames-waters-massive-leaks-huge-14952025

Wildfire in Crondall inspires heart-warming photos of two boys offering firefighters water while they work

from Surrey Live - News https://www.getsurrey.co.uk/news/hampshire-news/wildfire-crondall-inspires-heart-warming-14960598

Saturday, July 28, 2018

Oxshott school project to 'join up' village to create safer environment for pupils and parents

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/oxshott-school-project-join-up-14947165

The Star pub in Witley is looking for a new landlord to take over the business

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/star-pub-witley-looking-new-14930475

Chessington World of Adventures reopens and residents' water restored after burst water pipe

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/chessington-world-adventures-reopens-residents-14964033

Medicinal cannabis will be available on prescription by autumn

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/medicinal-cannabis-available-prescription-autumn-14958874

South Western Railway strike: Union members 'standing rock solid' as second day of industrial action gets underway

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/south-western-railway-strike-union-14963543

Manhunt launched to find suspect wanted after robbery of 84-year-old who later died in hospital

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/manhunt-launched-find-suspect-wanted-14963221

Chertsey, Painshill and Banstead fire stations closed on Saturday July 28

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/chertsey-painshill-banstead-fire-stations-14959761

Missing Addlestone woman Samantha Nicholls-Juett found 'safe and well'

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/missing-addlestone-woman-samantha-nicholls-14963068

Ewell stabbing: Man hospitalised with 'serious injuries' after being attacked by masked gang

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/ewell-stabbing-man-hospitalised-serious-14962966

Friday, July 27, 2018

Does RideLondon benefit Mole Valley or is it a nuisance? We asked Dorking businesses

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/ridelondon-benefit-mole-valley-nuisance-14940170

Vanished without trace: Five people who are still missing from Surrey years after they disappeared

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/vanished-without-trace-five-people-14945834

24 places for a fun or foodie first date in Surrey

from Surrey Live - News https://www.getsurrey.co.uk/whats-on/whats-on-news/valentines-day-surrey-24-places-14139854

12 luxurious ways to treat yourself in Surrey

from Surrey Live - News https://www.getsurrey.co.uk/whats-on/whats-on-news/10-luxurious-ways-treat-yourself-12309150

29 luxury hotels in Surrey and Hampshire worth splashing out on

from Surrey Live - News https://www.getsurrey.co.uk/lifestyle/luxury-hotels-in-surrey-stay-8957988

Man leaves store empty-handed after attempted armed robbery in West Molesey

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/man-leaves-store-empty-handed-14961451

Woking Food and Drink Festival chocolatier explains how to make chocolate at home

from Surrey Live - News https://www.getsurrey.co.uk/whats-on/food-drink-news/woking-food-drink-festival-chocolatier-14912550

Aldershot Silent Soldier memorial stolen just two weeks after it was installed

from Surrey Live - News https://www.getsurrey.co.uk/news/hampshire-news/aldershot-silent-solder-memorial-stolen-14956340

Horley 'balaclava man' found to be a hoax as girls admit they made it up

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/horley-balaclava-man-found-hoax-14961555

RideLondon weather forecast for Surrey 100 cycling event on Sunday

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/ridelondon-weather-forecast-surrey-100-14958712

Does Surrey's fire service have a chief officer? County council refuses to say

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/surreys-fire-service-chief-officer-14961249

Surrey supermarket fridges breaking down in the heatwave

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/surrey-supermarket-fridges-breaking-down-14959970

Pictures show how heatwave has made it England's brown and pleasant land

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/pictures-show-how-heatwave-made-14959791

Met Office map shows which parts of Surrey could be in for 'heavy thunderstorms' on Friday

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/met-office-map-shows-parts-14959336

New lamp post removed from pedestrianised Tunsgate Quarter after being 'hit by lorry'

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/new-lamp-post-removed-pedestrianised-14955894

Chessington World of Adventures to open late as burst pipe causes water shortage

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/chessington-world-adventures-open-late-14958798

South Western Railway warns RideLondon cyclists 'not to travel with bikes' on strike Saturday

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/south-western-railway-warns-ridelondon-14955232

Using the Flowchart Method for Diagnosing Ranking Drops - Whiteboard Friday

Posted by KameronJenkins

Being able to pinpoint the reason for a ranking drop is one of our most perennial and potentially frustrating tasks as SEOs. There are an unknowable number of factors that go into ranking these days, but luckily the methodology for diagnosing those fluctuations is readily at hand. In today's Whiteboard Friday, we welcome the wonderful Kameron Jenkins to show us a structured way to diagnose ranking drops using a flowchart method and critical thinking.


Video Transcription

Hey, everyone. Welcome to this week's edition of Whiteboard Friday. My name is Kameron Jenkins. I am the new SEO Wordsmith here at Moz, and I'm so excited to be here. Before this, I worked at an agency for about six and a half years. I worked in the SEO department, and really a common thing we encountered was a client's rankings dropped. What do we do?

This flowchart was kind of built out of that mentality of we need a logical workflow to be able to diagnose exactly what happened so we can make really pointed recommendations for how to fix it, how to get our client's rankings back. So let's dive right in. It's going to be a flowchart, so it's a little nonlinear, but hopefully this makes sense and helps you work smarter rather than harder.

Was it a major ranking drop?: No

The first question I'd want to ask is: Was their ranking drop major? By major, I mean something like falling from page 1 to page 5 overnight. Minor would be something like slipping a couple of positions, say from position 3 to position 5.
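To make that major/minor call repeatable across many keywords, here is a minimal Python sketch. It assumes 10 organic results per page and treats a fall of two or more result pages as "major"; both numbers are illustrative assumptions rather than thresholds given in the video, and `page_of` and `classify_drop` are hypothetical helper names.

```python
# Assumption: 10 organic results per page (SERP layouts vary in practice).
RESULTS_PER_PAGE = 10


def page_of(position: int) -> int:
    """Return the 1-based results page for a 1-based ranking position."""
    return (position - 1) // RESULTS_PER_PAGE + 1


def classify_drop(before: int, after: int, major_page_loss: int = 2) -> str:
    """Label a ranking change as 'none', 'minor' or 'major'.

    A drop counts as major when the keyword falls at least
    `major_page_loss` result pages (an assumed, tunable threshold).
    """
    if after <= before:
        return "none"  # held position or improved
    if page_of(after) - page_of(before) >= major_page_loss:
        return "major"
    return "minor"


print(classify_drop(3, 5))   # same page, so "minor"
print(classify_drop(2, 45))  # page 1 to page 5, so "major"
```

Counting pages rather than raw positions mirrors the examples above: a slip within page 1 is minor, while a fall of several pages overnight is major.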

We're going to take this path first. It was minor.


Has there been a pattern of decline lasting about a month or greater?

That's not a magic number. A month is something that you can use as a benchmark. But if there's been a steady decline and it's been one week it's position 3 and then it's position 5 and then position 7, and it just keeps dropping over time, I would consider that a pattern of decline.
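One way to check for that kind of steady decline in exported tracking data is sketched below. It assumes one position reading per week and uses four consecutive worsening readings as a stand-in for "about a month"; both are assumptions, and `is_steady_decline` is a hypothetical name.

```python
from typing import Sequence


def is_steady_decline(weekly_positions: Sequence[int], min_weeks: int = 4) -> bool:
    """Return True if the position got strictly worse (numerically larger)
    for at least `min_weeks` consecutive weekly readings.

    `min_weeks=4` approximates the "about a month" benchmark; it is an
    assumption, not a rule. Any flat or improving week resets the streak.
    """
    streak = 1
    for prev, cur in zip(weekly_positions, weekly_positions[1:]):
        if cur > prev:
            streak += 1
            if streak >= min_weeks:
                return True
        else:
            streak = 1
    return False


print(is_steady_decline([3, 5, 7, 9]))  # True: worse every week for a month
print(is_steady_decline([3, 5, 4, 6]))  # False: normal volatility
```

Requiring a strict week-over-week decline is deliberately conservative; a looser version could allow flat weeks as long as the net trend is downward.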

So if no, I would actually say wait.

- Volatility is normal, especially if you're at the bottom of page 1, maybe page 2 plus. There's going to be a lot more shifting of the search results in those positions. So volatility is normal.

- Keep your eyes on it, though. It's really good to just take note of it like, "Hey, we dropped. Okay, I'm going to check that again next week and see if it continues to drop, then maybe we'll take action."

- Wait it out. At this point, I would just caution against making big website updates if it hasn't really been warranted yet. So volatility is normal. Expect that. Keep your finger on the pulse, but just wait it out at this point.

If there has been a pattern of decline though, I'm going to have you jump to the algorithm update section. We're going to get there in a second. But for now, we're going to go take the major rankings drop path.

Was it a major ranking drop?: Yes

The first question on this path that I'd want to ask is:


Was there a rank tracking issue?

Now, some of these are going to seem pretty basic, like how would that ever happen, but believe me, it happens every once in a while. So before we make major updates to the website, I'd want to check the rank tracking.

I. The wrong domain or URL.

This one happens a lot. Maybe you changed domains, or maybe you moved a page, and the old page or old domain is still listed in your rank tracker. If that's the case, the rank tracking tool doesn't know which URL to judge the rankings by. So it's going to look like you dropped from position 1 to position 10 overnight, and that's like, whoa, that's a huge update. But it's actually just that you have the wrong URL in there. So if there's been a page update or a domain update, check to make sure that you've updated your rank tracker.

II. Glitches.

It's software, so it can break. Things can cause it to be off for whatever reason. I don't know how common that is; it probably depends entirely on which software you use. But glitches do happen, so I would manually check your rankings.

III. Manually check rankings.

One way I would do that is...

- Open an incognito window and make sure you're logged out of Google so the results aren't personalized. Then search the term you want to rank for and see where you're actually ranking.

- Google's Ad Preview tool. That one is really good too if you want to see where you're ranking locally, because you can set your geolocation. You can also compare mobile versus desktop rankings, so it's really good for things like that.

- Cross-check with another tool, like Moz's rank tracking tool. You can pop in your URLs, see where you're ranking, and compare that with your own tool.
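If you export positions from two sources (your tracker and a manual check or second tool), a small comparison like this can flag which keywords disagree. It's a minimal sketch: the keyword names and the tolerance of 2 positions are hypothetical, and the input is assumed to be a plain keyword-to-position mapping rather than any particular tool's export format.

```python
from __future__ import annotations

from typing import Optional


def rank_discrepancies(
    tool_a: dict[str, int], tool_b: dict[str, int], tolerance: int = 2
) -> dict[str, tuple[Optional[int], Optional[int]]]:
    """Return keywords whose positions differ by more than `tolerance`
    between two sources, or that one of the sources is missing."""
    issues = {}
    for keyword in sorted(tool_a.keys() | tool_b.keys()):
        a, b = tool_a.get(keyword), tool_b.get(keyword)
        if a is None or b is None or abs(a - b) > tolerance:
            issues[keyword] = (a, b)
    return issues


tracker = {"blue widgets": 10, "red widgets": 4}  # hypothetical export
manual = {"blue widgets": 1, "red widgets": 5}    # hypothetical manual check
print(rank_discrepancies(tracker, manual))  # {'blue widgets': (10, 1)}
```

A large disagreement on a single keyword points toward a tracking problem (such as a stale URL) rather than a real ranking drop.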

So, back to the flowchart: was there a rank tracking issue? If yes, you found your problem. If it was just a rank tracking tool issue, that's actually great, because it means you don't have to make a lot of changes. Your rankings haven't actually dropped. But if there is no rank tracking issue that you can pinpoint, then I would move on to Google Search Console.

Problems in Google Search Console?

So Google Search Console is really helpful for checking site health matters. One of the main things I would want to check in there, if you experience a major drop especially, is...

I. Manual actions.

If you navigate to Manual Actions, you could see notes in there like unnatural links pointing to your site. Or maybe you have thin or low-quality content on your site. If those things are present in your Manual Actions, then you have a reference point. You have something to go off of. There's a lot of work involved in lifting a manual penalty that we can't get into here unfortunately. Some things that you can do to focus on manual penalty lifting...

- Moz's Link Explorer. You can check your inbound links and see their spam score. You could look at things like anchor text to see if maybe the links pointing to your site are keyword stuffed. So you can use tools like that.

- There are a lot of good articles too, in the industry, just on getting penalties lifted. Marie Haynes especially has some really good ones. So I would check that out.

But you have found your problem if there's a manual action in there. So focus on getting that penalty lifted.

II. Indexation issues.

Before you move out of Search Console, though, I would check for indexation issues as well. Maybe you don't have a manual penalty. But go to your Index Coverage report and see if anything you submitted in your sitemap is experiencing issues. Maybe it's blocked by robots.txt, or maybe you accidentally noindexed it. You can probably see that in the Index Coverage report. If Search Console shows a problem, you've found it. If not, you're going to move on to algorithm updates.
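You can also spot-check whether important URLs are blocked by robots.txt with Python's standard `urllib.robotparser` module. This is a minimal sketch: the robots.txt rules and example.com URLs are hypothetical, and Python's parser follows the basic robots.txt rules, so Google's own handling of edge cases (such as wildcards) may differ.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that `robots_txt` disallows for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text we already have; no network fetch
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


robots = "User-agent: *\nDisallow: /blog/\n"  # hypothetical rules
urls = ["https://example.com/", "https://example.com/blog/post-1"]
print(blocked_urls(robots, urls))  # ['https://example.com/blog/post-1']
```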

Algorithm updates

Algorithm updates happen all the time. Google says that maybe one to two happen per day. Not all of those are going to be major. The major ones, though, are documented in multiple places. Moz has a really good list of algorithm updates over time that you can reference, and there are other good lists too. You can navigate to the exact year and month that your site experienced a rankings drop and see if it correlates with any algorithm update.

For example, say your site lost rankings in about January 2017. That's about the time Google released its Intrusive Interstitials Update, so I would look at my site and ask, "Do I have intrusive interstitials? Is this something that's affecting my website?"

If you can match up an algorithm update with the time that your rankings started to drop, you have direction. You found an issue. If you can't match it up to any algorithm updates, it's finally time to move on to site updates.
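Matching a drop date against a list of update dates is simple date arithmetic, sketched below. The update list holds only the example mentioned above, with its rollout date assumed to be January 10, 2017; in practice you would load a maintained update history. The 14-day window and the `updates_near` name are assumptions for illustration.

```python
from __future__ import annotations

from datetime import date

# Hypothetical sample list; the rollout date is an assumption.
KNOWN_UPDATES = {
    "Intrusive Interstitials Update": date(2017, 1, 10),
}


def updates_near(drop_date: date, updates: dict[str, date], window_days: int = 14) -> list[str]:
    """Return updates released within `window_days` of the ranking drop."""
    return sorted(
        name
        for name, released in updates.items()
        if abs((drop_date - released).days) <= window_days
    )


print(updates_near(date(2017, 1, 20), KNOWN_UPDATES))  # ['Intrusive Interstitials Update']
print(updates_near(date(2017, 6, 1), KNOWN_UPDATES))   # []
```

A match only gives you a direction to investigate; correlation with an update is not proof that the update caused the drop.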

Site updates

What changes happened to your website recently? There are a lot of different things that could have happened to your website. Just keep in mind too that maybe you're not the only one who has access to your website. You're the SEO, but maybe tech support has access. Maybe even your paid ad manager has access. There are a lot of different people who could be making changes to the website. So just keep that in mind when you're looking into it. It's not just the changes that you made, but changes that anyone made could affect the website's ranking. Just look into all possible factors.

Other factors that can impact rankings

A lot of different things, like I said, can influence your site's rankings. A lot of things can inadvertently happen that you can pinpoint and say, "Oh, that's definitely the cause."

Some examples of things that I've personally experienced on my clients' websites...

I. Renaming pages and letting them 404 without updating with a 301 redirect.

There was one situation where a client had a blog. They had hundreds of really good blog posts, all ranking for nice long-tail terms. The client emailed tech support to change the name of the blog. Unfortunately, all of the posts lived under the blog, and when he made that change, he didn't add 301 redirects, so all of those pages that were ranking really nicely started to fall out of the index. The rankings went with them. There's your problem. It was unfortunate, but at least we were able to diagnose what happened.
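When a section like a blog is renamed, every old URL under it needs its own 301 redirect to the matching new URL. Here's a minimal sketch that builds that old-to-new mapping, assuming the posts simply moved from one path prefix to another; the `/blog/` and `/news/` paths are hypothetical. You would then apply the mapping through your server configuration or a redirect plugin.

```python
from __future__ import annotations


def redirect_map(old_paths: list[str], old_prefix: str, new_prefix: str) -> dict[str, str]:
    """Map each path under `old_prefix` to the same path under `new_prefix`.

    Each pair is intended to become a 301 (permanent) redirect.
    Paths outside the renamed section are left alone.
    """
    return {
        path: new_prefix + path[len(old_prefix):]
        for path in old_paths
        if path.startswith(old_prefix)
    }


old = ["/blog/post-a", "/blog/post-b", "/about"]
print(redirect_map(old, "/blog/", "/news/"))
# {'/blog/post-a': '/news/post-a', '/blog/post-b': '/news/post-b'}
```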

II. Content cutting.

Maybe you're working with a UX team, a design team, someone who is looking at the website from a visual, a user experience perspective. A lot of times in these situations they might take a page that's full of really good, valuable content and they might say, "Oh, this is too clunky. It's too bulky. It has too many words. So we're going to replace it with an image, or we're going to take some of the content out."

When this happens, if the content was the thing that was making your page rank and you cut that, that's probably something that's going to affect your rankings negatively. By the way, if that's happening to you, Rand has a really good Whiteboard Friday on kind of how to marry user experience and SEO. You should definitely check that out if that's an issue for you.

III. Valuable backlinks lost.

In another situation, I was diagnosing a client, and one of their backlinks had been dropped. It just so happened to be the only thing that changed over that period. It was a really valuable backlink, the site linking to them had removed it for whatever reason, and the client's rankings started to decline after that. With tools like Moz's Link Explorer, you can see gained and lost backlinks over time. So I would check that out if you think that might be an issue for you.
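If you have two backlink exports from different dates, finding lost links is a set difference. This sketch also sorts the lost links by a value score so the most important losses surface first. The domains and scores are hypothetical, and the score stands in for whatever authority metric your tool reports.

```python
from __future__ import annotations


def lost_backlinks(before: dict[str, float], after: dict[str, float]) -> list[tuple[str, float]]:
    """Return linking sources present in `before` but missing from `after`,
    sorted from most to least valuable by their (assumed) score."""
    lost = [(source, score) for source, score in before.items() if source not in after]
    return sorted(lost, key=lambda item: item[1], reverse=True)


before = {"news.example.org": 80.0, "blog.example.net": 35.0, "dir.example.com": 5.0}
after = {"blog.example.net": 35.0}
print(lost_backlinks(before, after))
# [('news.example.org', 80.0), ('dir.example.com', 5.0)]
```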

IV. Accidental no index.

Depending on what type of CMS you work with, it might be really, really easy to accidentally check No Index on a page. If you noindex a really important page, Google takes it out of its index, and your rankings could drop. So those are just some examples of things that can happen. Like I said, hundreds and hundreds of things could have been changed on your site, but it's really important to try to pinpoint exactly what those changes were and whether they coincided with when your rankings started to drop.
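An accidental noindex usually shows up as a robots meta tag in the page's HTML. Here's a minimal sketch using Python's standard `html.parser` to detect one. It only checks `<meta name="robots">` and `<meta name="googlebot">` tags; a noindex can also be sent as an `X-Robots-Tag` HTTP header, which this sketch does not inspect.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from robots-style meta tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str) -> bool:
    """Return True if the page's robots meta tags include 'noindex'."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))  # True
```

Running this across your important URLs after a CMS change is a quick way to catch a checkbox that was ticked by mistake.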

SERP landscape

So we got all the way to the bottom. If you're at the point where you've looked at all of the site updates and you still haven't found anything that would have caused a rankings drop, I would say finally look at the SERP landscape.

What I mean by that is just Google your keyword that you want to rank for or your group of keywords that you want to rank for and see which websites are ranking on page 1. I would get a lay of the land and just see:

- What are these pages doing?

- How many backlinks do they have?

- How much content do they have?

- Do they load fast?

- What's the experience?

Then make content better than that. So many people think that to rank, they just need to avoid being spammy and avoid having things broken on their site. But that's not SEO; that's really just what lets you compete. You have to have content that's the best answer to searchers' questions, and that's what's going to get you ranking.

I hope that was helpful. This is a really good way to just kind of work through a ranking drop diagnosis. If you have methods, by the way, that work for you, I'd love to hear from you and see what worked for you in the past. Let me know, drop it in the comments below.

Thanks, everyone. Come back next week for another edition of Whiteboard Friday.

Video transcription by Speechpad.com


from The Moz Blog http://tracking.feedpress.it/link/9375/9869223

Oxted snowboard champion Ellie Soutter dies on 18th birthday

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/oxted-snowboard-champion-ellie-soutter-14958297

Quorn gluten free burgers recalled as they contain 'undeclared gluten'

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/quorn-gluten-free-burgers-recalled-14958323

Thursday, July 26, 2018

A23 Hooley widening plan could kill businesses, says trader as more details emerge

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/a23-hooley-widening-plan-could-14956455

'Omissions' in recording of mental health reports 'did not cause or contribute to' patient's suicide

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/omissions-recording-mental-health-reports-14946781

Investigation into threats sent to geese cull primary school has been shelved by police

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/investigation-threats-sent-geese-cull-14949089

How to optimize WordPress after running a page speed test

If you’re serious about your WordPress website, you have probably run a page speed test at some point. There are many variations of these tests out there, some more convenient and true to your target audience than others. But they will all give you a pretty decent idea of where you can still improve your site.

Certain speed optimizations may come across as “technically challenging” for some of you. Luckily, you have set up a WordPress website, and one of the things that make WordPress so awesome is the availability of plugins. Some are free, some are paid, but they all help simplify difficult tasks. In this article, we’ll first show you a couple of page speed tests so you can check your page speed yourself. After that, we’ll go into a number of speed optimization recommendations and show you how to address them using just plugins.

Running a page speed test

Running a page speed test is as simple as entering your website’s URL into a form on a website. That website then analyzes your site and comes up with recommendations. I’d like to mention two of those tools, but there are many more available.

- Pingdom provides a tool for speed testing. The nice thing is that you can test from different servers, for instance, from a server that is relatively close to you. Especially if you are targeting a local audience, this is a nice way to see how fast your website is for them.

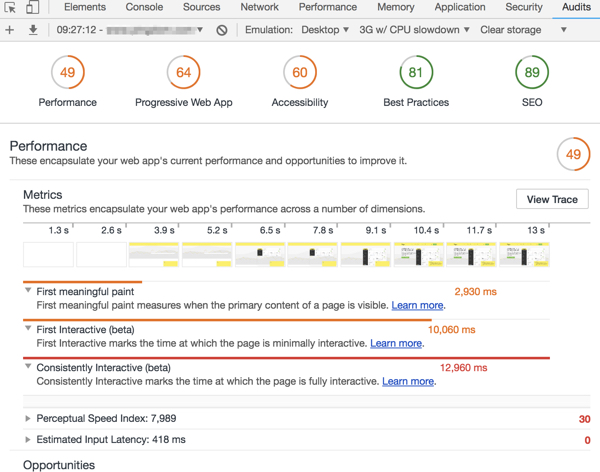

- Google Lighthouse is a performance tool that lives in your browser. Right-click on a page, choose Inspect, and check the Audits tab in the panel that opens. Here, you can test speed for mobile or desktop, and on different bandwidths, for example. The test result looks like this:

Small remark: most sites appear slower in Lighthouse. This is because Lighthouse emulates a number of devices and connections, for instance, a slow mobile 3G connection (see the second bar in the screenshot above). With mobile first, this is actually a good thing, right?

Before Lighthouse, Google PageSpeed Insights already showed us a lot of speed improvements. It even let you download optimized images, CSS and JS files. As you are working with WordPress, though, it might be hard to replace your files with these optimized ones. Luckily, WordPress has plugins.

There are many, many more speed testing tools available online. These are just a few that I wanted to mention before going into WordPress solutions that will help you improve speed.

Optimizing your page speed using WordPress plugins

After running a page speed test, I am pretty sure that most website owners feel they should invest some time into optimizing their website’s speed. You will have a dozen recommendations, ranging from things you can do yourself to things you might need technical help with.

Image optimization

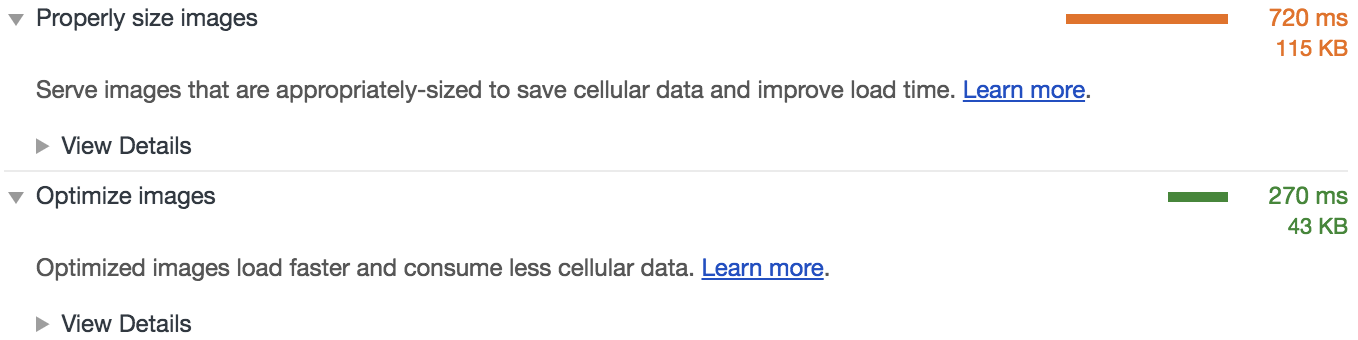

Your speed test might return this recommendation:

Images usually play a large part in speed optimization, especially if you use large header images or if your site is image-heavy overall. It’s always a good idea to optimize these images, and these days it can be done with little quality loss. One thing to look for, as in the page speed test example above, is images that are larger than they are displayed on screen. If you take an image that covers your entire screen and squeeze it into a 300 x 200 pixel spot on your website, you might be serving an image of several MB. Instead, you could change the dimensions of your image before uploading and serve it in the right dimensions, at a file size of a few KB. By reducing the file size, you speed up your website.
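The resizing arithmetic is simple enough to sketch. This Python example computes the dimensions an image should be scaled to before uploading so that it fits a display slot without distortion, and how much of the original pixel data would otherwise go unseen. The 3000 x 2000 source image is a hypothetical example, and the function names are illustrative only; actual file size also depends on format and compression, not just pixel count.

```python
from __future__ import annotations


def fit_within(width: int, height: int, box_w: int, box_h: int) -> tuple[int, int]:
    """Scale (width, height) down to fit inside (box_w, box_h), keeping the
    aspect ratio. Images already smaller than the box are never upscaled."""
    scale = min(box_w / width, box_h / height, 1.0)
    return round(width * scale), round(height * scale)


def wasted_pixel_ratio(intrinsic: tuple[int, int], displayed: tuple[int, int]) -> float:
    """Fraction of the served pixels that the visitor never actually sees."""
    (iw, ih), (dw, dh) = intrinsic, displayed
    return 1 - (dw * dh) / (iw * ih)


print(fit_within(3000, 2000, 300, 200))                            # (300, 200)
print(f"{wasted_pixel_ratio((3000, 2000), (300, 200)):.0%} wasted")  # 99% wasted
```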

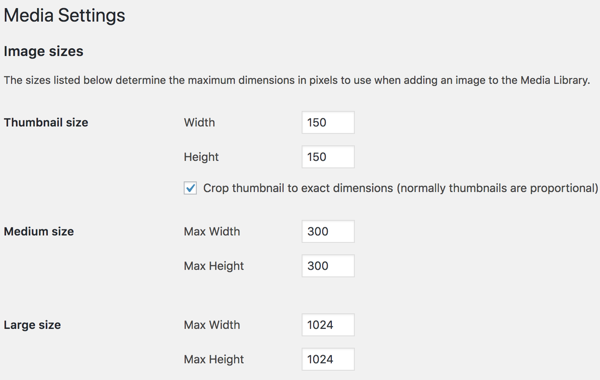

Setting image dimensions in WordPress

WordPress comes with a handy default feature, where every image you upload is stored in several dimensions:

So if you want all the images in your posts to be the same width, pick one of the predefined sizes or set your custom dimensions here. Images that you upload are scaled according to these dimensions, and the image in its original dimensions will also be available to you.

If you load, for instance, the medium size image instead of the much larger original, this will serve an image in a smaller file size, and this will be faster.
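Choosing which stored size to load comes down to picking the smallest one that is still at least as wide as the spot it fills. Here's a Python sketch of that choice. The size names and widths (thumbnail 150, medium 300, large 1024) are assumed WordPress defaults, so check your own values under Settings > Media. This models the decision only; it is not WordPress’s own code, and it ignores height limits and cropping.

```python
from __future__ import annotations

# Assumed default maximum widths per size name; verify under Settings > Media.
SIZES = {"thumbnail": 150, "medium": 300, "large": 1024}


def pick_size(display_width: int, sizes: dict[str, int] = SIZES, original: str = "full") -> str:
    """Return the smallest stored size at least `display_width` pixels wide,
    falling back to the original upload when no stored size is big enough."""
    candidates = sorted((width, name) for name, width in sizes.items() if width >= display_width)
    return candidates[0][1] if candidates else original


print(pick_size(280))   # medium
print(pick_size(800))   # large
print(pick_size(1600))  # full
```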

Image optimization plugins

There are also a number of image optimization plugins (paid and free) available for WordPress, like Kraken.io, Smush or Imagify. These might, for instance, remove so-called Exif data from the image. That is data that is really interesting for a photographer, containing information about the camera settings used to take the photo. Not really something you need for the image in your blog post, unless you are in fact a photographer. Depending on your settings, you could also have these plugins replace your image with one that is slightly lower in quality, for instance.

Some of these plugins can also help you resize your images, by the way. Test them for yourself and see which one is most convenient to work with and compresses your image files best. For further reading about image optimization, be sure to check this post about image SEO.

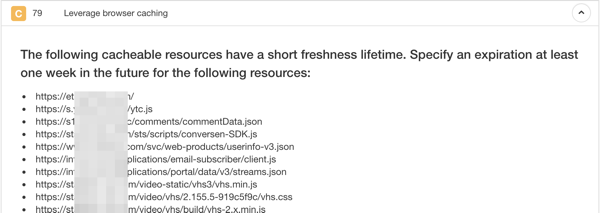

Browser cache

Another issue that comes across a lot in page speed tests is browser cache optimization.

Browser cache is about storing website files, like JS and CSS, in your local temporary internet files folder, so that they can be retrieved quickly on your next visit. Or, as Mozilla puts it:

The Firefox cache temporarily stores images, scripts, and other parts of websites you visit in order to speed up your browsing experience.

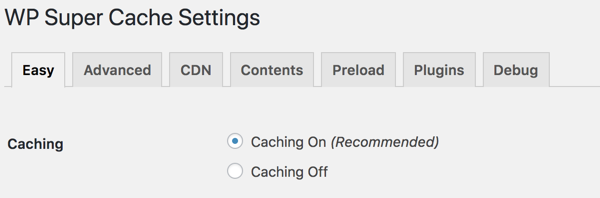

Caching in WP Super Cache

Most speed optimization plugins help you to optimize this caching. Sometimes as simple as this:

The Advanced tab of WP Super Cache has a lot more in-depth configuration for that, but the plugin’s default settings are usually a good place to begin. After that, start tweaking the advanced settings and see what they do.

Note that WP Super Cache has an option to disable caching for what they call “known users”. These are logged-in users (and commenters), which allows for development (or commenting) without caching. That means that every time you refresh the website in your browser window, you get the latest state of the website instead of a cached version. A cached version might be out of date because of the expiration time: if you set that expiration time to, say, 3600 seconds, a browser will only check for changes to the cached website after an hour. You can see how that would be annoying if you want to see design changes right away while developing.
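The same expiration arithmetic can be expressed with the HTTP `Cache-Control: max-age` rule that browsers apply: a cached copy counts as fresh while its age is below `max-age` seconds. Here's a simplified Python sketch; it deliberately ignores other directives such as `no-cache` and `no-store`, and it models the HTTP rule rather than WP Super Cache’s internal behavior.

```python
from __future__ import annotations

import re
from typing import Optional


def max_age(cache_control: str) -> Optional[int]:
    """Extract the max-age value (in seconds) from a Cache-Control header."""
    match = re.search(r"(?:^|,)\s*max-age\s*=\s*(\d+)", cache_control, re.IGNORECASE)
    return int(match.group(1)) if match else None


def is_fresh(cache_control: str, age_seconds: int) -> bool:
    """A cached copy is fresh while its age is below max-age."""
    limit = max_age(cache_control)
    return limit is not None and age_seconds < limit


header = "public, max-age=3600"  # one hour, as in the example above
print(is_fresh(header, 1800))    # True: half an hour old, served from cache
print(is_fresh(header, 4000))    # False: older than an hour, so check for changes
```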

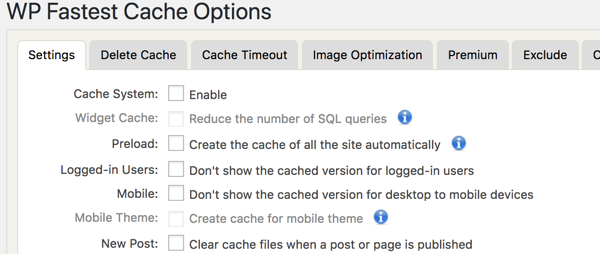

Other WordPress caching plugins

I mention WP Super Cache here because it’s free and easy to use for most users. But there are alternatives. WP Fastest Cache is popular as well, with 600K+ active installs. It has similar features for optimizing caching:

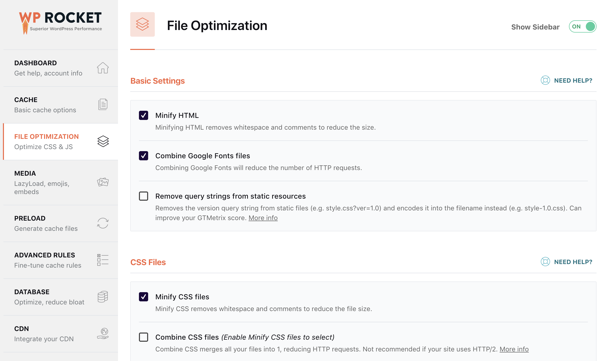

A paid plugin that I also like is WP Rocket. It’s so easy to configure that you’ll wonder if you have done things right. But your page speed test will tell you that it works pretty much immediately, straight out of the box. Let me explain something about compression and show you WP Rocket’s settings for that.

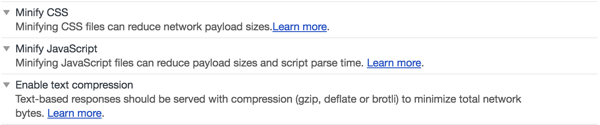

Compression

Regardless of whether your page speed test tool tells you to:

- Try to minify your CSS files,

- minify the JS files of your site,

- minify your HTML files or

- enable (GZIP) compression

These recommendations are all compression related. It’s about making your files as small as possible before sending them to a browser. It’s like reducing the file size of your images, but for JavaScript or CSS files, or for instance your HTML file itself. GZIP compression is about sending a zipped file to your browser, that your browser can unzip and read. Recommendations may look like this:
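To see why GZIP helps so much with text files like CSS, here's a small sketch using Python’s standard `gzip` module. It compresses a deliberately repetitive, made-up stylesheet and checks that decompressing gives back exactly the original bytes, which is what the browser does on its end. Real stylesheets are less repetitive, so expect smaller savings than this toy example shows.

```python
import gzip


def gzip_ratio(data: bytes) -> float:
    """Return compressed size as a fraction of the original size."""
    compressed = gzip.compress(data)
    # The browser must be able to unzip the file back to the exact original.
    if gzip.decompress(compressed) != data:
        raise ValueError("round trip failed")
    return len(compressed) / len(data)


# Hypothetical, highly repetitive CSS used only for illustration.
css = ("body { margin: 0; padding: 0; }\n" * 200).encode("utf-8")
print(f"compressed to {gzip_ratio(css):.1%} of the original size")
```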

In WP Rocket, the settings for compression look like this:

Again, a lot is set to the right settings by default, as we do in Yoast SEO, but even more can be configured to your needs. How well compression works might also depend on your server settings.

If you feel like the compression optimization done by any of the plugins mentioned above fails, contact your hosting company and see if and how they can help you configure compression for your website. They should be able to help you out, especially if you are using one of these WordPress hosting companies.

Serving CSS and JS files

One more thing that speed tests will tell you is to combine (external) CSS or JavaScript files, or to defer parsing of scripts. These recommendations are about the way these files are served to the website.

The combine option for these files is, like you can see in the WP Rocket screenshot above, not recommended for HTTP/2 websites. For these websites, multiple script files can be loaded at the same time. For non-HTTP/2 sites, combining these files will lower the number of server requests, which again makes your site faster.

Deferring scripts or recommendations like “Eliminate render-blocking JavaScript and CSS in above-the-fold content” are about the way these scripts are loaded in your template files. If all of these are served from the top section of your template, your browser will wait to show (certain elements of) your page until these files are fully loaded. Sometimes it pays to transfer less-relevant scripts to the footer of your template, so your browser will first show your website. It can add the enhancements that these JavaScripts or CSS files make later. A plugin that can help you with this is Scripts-to-Footer. Warning: test this carefully. If you change the way that these files load, this can impact your website. Things may all of a sudden stop working or look different.
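The idea of keeping only essential scripts in the head and moving the rest to the footer can be sketched as below. The `Script` class, the `critical` flag and the file names are hypothetical; deciding which scripts are truly critical is exactly the judgment call the warning above is about. The footer tags also get the standard HTML `defer` attribute, which tells the browser to run them, in order, after the page has been parsed.

```python
from __future__ import annotations

import html
from dataclasses import dataclass


@dataclass
class Script:
    src: str
    critical: bool = False  # hypothetical flag: needed before first render?


def render_scripts(scripts: list[Script]) -> tuple[list[str], list[str]]:
    """Split script tags into head (critical) and footer (deferred) groups."""
    head = [f'<script src="{html.escape(s.src)}"></script>' for s in scripts if s.critical]
    footer = [f'<script src="{html.escape(s.src)}" defer></script>' for s in scripts if not s.critical]
    return head, footer


head, footer = render_scripts([Script("/js/menu.js", critical=True), Script("/js/slider.js")])
print(head)    # ['<script src="/js/menu.js"></script>']
print(footer)  # ['<script src="/js/slider.js" defer></script>']
```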

We have to mention CDNs

A Content Delivery Network (CDN) caches static content. By static content, we mean files like HTML, CSS, JavaScript and image files. These files don’t change that often, so we can serve them from a CDN with many servers located near your visitors and get them to your visitors super fast. It’s like traveling: the shorter the trip, the faster you get to your destination. Common sense, right? The same goes for these files. If the server serving the static file is located near your visitor (and the servers are equally fast, obviously), the site will load faster for that visitor. Please read this post if you want to know more about CDNs.
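The “shorter trip” idea can be illustrated by picking the edge server with the smallest great-circle (haversine) distance to a visitor. This is a simplified sketch: the edge locations are hypothetical, and real CDNs typically route using DNS or anycast and measured network conditions rather than straight-line distance.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0

# Hypothetical edge locations as (latitude, longitude).
EDGES = {
    "London": (51.51, -0.13),
    "Frankfurt": (50.11, 8.68),
    "New York": (40.71, -74.01),
}


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    dlat, dlon = radians(lat2 - lat1), radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def nearest_edge(lat, lon, edges=EDGES):
    """Name of the edge server closest to the visitor."""
    return min(edges, key=lambda name: haversine_km(lat, lon, *edges[name]))


print(nearest_edge(51.24, -0.57))   # visitor in Guildford: London
print(nearest_edge(42.36, -71.06))  # visitor in Boston: New York
```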

There are many ways to optimize page speed in WordPress

Page speed tests will give you even more recommendations. Again, you might not be able to follow up on all of these yourself. Be sure to ask your expert in that case, like your web developer or agency, or your hosting company. But in the end, it’s good that you are using WordPress. There are many decent plugins that can help you optimize the speed of your website after a page speed test!

Read more: Site speed: tools and suggestions »

The post How to optimize WordPress after running a page speed test appeared first on Yoast.

from Yoast • SEO for everyone https://yoast.com/how-to-optimize-wordpress-after-running-a-page-speed-test/

Man set fire to Redhill YMCA room in 'reckless' attempt to avoid becoming homeless

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/man-set-fire-redhill-ymca-14951539

Two motorists guilty of causing death of former Gurkha's wife by careless driving

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/death-careless-driving-radhika-gurung-14957113

Young girls 'chased across park in Horley by man in balaclava'

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/young-girls-chased-across-park-14957018

Addlestone missing woman Samantha Nicholls-Juett does not have medication with her

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/addlestone-missing-woman-samantha-nicholls-14956751

22 places in Surrey every cheese and wine lover must visit

from Surrey Live - News https://www.getsurrey.co.uk/whats-on/food-drink-news/22-delicious-places-surrey-every-12541374

23 nail salons in Surrey ideal for treating yourself to a manicure

from Surrey Live - News https://www.getsurrey.co.uk/whats-on/family-kids-news/mothers-day-nail-salon-deals-14297077

Farnborough Airshow responds to critics following string of negative comments about this year's event

from Surrey Live - News https://www.getsurrey.co.uk/news/hampshire-news/farnborough-airshow-responds-critics-following-14949812

Affordable yet charming places to stay in and around Surrey if you're on a budget

from Surrey Live - News https://www.getsurrey.co.uk/whats-on/affordable-yet-charming-places-stay-10870387

Woking FC stadium in huge 10,000-seat overhaul with 1,000 new apartments

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/woking-fc-stadium-huge-10000-14956148

Hottest day of the year confirmed - yes, it's soared to 34.1°C in Reigate!

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/hottest-day-year-confirmed-yes-14955881

Trial date set for three men accused of murdering Walton fisherman Scott Wilkinson

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/trial-date-set-three-men-14954808

What are T levels and why doesn't Guildford MP Anne Milton want her children to study them?

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/what-levels-doesnt-guildford-mp-14925414

Two men left with serious injuries after Stanwell Moor 'assault'

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/two-men-left-serious-injuries-14951305

Wyevale Garden Centres including £35m flagship store up for sale as part of national plan

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/wyevale-garden-centres-including-35m-14949613

Body of Christopher Wade found in Farnborough woodland almost two weeks after he went missing

from Surrey Live - News https://www.getsurrey.co.uk/news/hampshire-news/body-christopher-wade-found-farnborough-14954755

Man who police want to speak to about 'serious assault' could be in Woking

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/man-who-police-want-speak-14954423

easyJet announces more than 380 cabin crew jobs up for grabs at Gatwick Airport

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/easyjet-jobs-gatwick-airport-flight-14953886

22 beaches in easy reach of Surrey (including a few secret ones, shhh!)

from Surrey Live - News https://www.getsurrey.co.uk/whats-on/family-kids-news/nearest-sandy-beaches-to-surrey-9833696

Thunderstorms forecast to break up the heatwave, but will they hit Surrey?

from Surrey Live - News https://www.getsurrey.co.uk/news/uk-world-news/thunderstorms-forecast-break-up-heatwave-14951496

Furious residents denied chance to address open forum on Staines Park's future

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/furious-residents-denied-chance-address-14946845

Here's why the Red Arrows will never perform an acrobatic display at Farnborough Airshow again

from Surrey Live - News https://www.getsurrey.co.uk/news/hampshire-news/heres-red-arrows-never-perform-14952416

Best fitness and wellness festivals to hit in 2018

from Surrey Live - News https://www.getsurrey.co.uk/whats-on/whats-on-news/best-fitness-wellness-festivals-hit-14197733

Surrey firefighters 'bailed out' by London colleagues on another day of crew shortages

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/surrey-firefighters-bailed-out-london-14952594

South Western Railway strike - how to claim refund if you arrive 15 minutes late

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/south-western-railway-strike-how-14953635

Unauthorised traveller encampment in Row Town served first notice to leave

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/unauthorised-traveller-encampment-row-town-14952769

Wednesday, July 25, 2018

Four-year-old girl told she must leave dance group after dispute between family and organisers

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/four-year-old-girl-told-14929747

More parents are being prosecuted for unauthorised school absences, here's the number in Surrey

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/more-parents-being-prosecuted-unauthorised-14947183

Network Rail blamed for large percentage of South Western Railway disruption, report reveals

from Surrey Live - News https://www.getsurrey.co.uk/news/surrey-news/network-rail-blamed-large-percentage-14946796

Rewriting the Beginner's Guide to SEO, Chapter 2: Crawling, Indexing, and Ranking

Posted by BritneyMuller

It's been a few months since our last share of our work-in-progress rewrite of the Beginner's Guide to SEO, but after a brief hiatus, we're back to share our draft of Chapter Two with you! This wouldn’t have been possible without the help of Kameron Jenkins, who has thoughtfully contributed her great talent for wordsmithing throughout this piece.

This is your resource, the guide that likely kicked off your interest in and knowledge of SEO, and we want to do right by you. You left amazingly helpful commentary on our outline and draft of Chapter One, and we'd be honored if you would take the time to let us know what you think of Chapter Two in the comments below.

Chapter 2: How Search Engines Work – Crawling, Indexing, and Ranking

First, show up.

As we mentioned in Chapter 1, search engines are answer machines. They exist to discover, understand, and organize the internet's content in order to offer the most relevant results to the questions searchers are asking.

In order to show up in search results, your content needs to first be visible to search engines. It's arguably the most important piece of the SEO puzzle: If your site can't be found, there's no way you'll ever show up in the SERPs (Search Engine Results Page).

How do search engines work?

Search engines have three primary functions:

- Crawl: Scour the Internet for content, looking over the code/content for each URL they find.

- Index: Store and organize the content found during the crawling process. Once a page is in the index, it’s in the running to be displayed as a result to relevant queries.

- Rank: Provide the pieces of content that will best answer a searcher's query, ordering the search results from most helpful to least helpful for that particular query.

What is search engine crawling?

Crawling is the discovery process in which search engines send out a team of robots (known as crawlers or spiders) to find new and updated content. Content can vary (it could be a webpage, an image, a video, a PDF, etc.), but regardless of the format, content is discovered by links.

The bot starts out by fetching a few web pages, and then follows the links on those webpages to find new URLs. By hopping along this path of links, crawlers are able to find new content and add it to their index — a massive database of discovered URLs — to later be retrieved when a searcher is seeking information that the content on that URL is a good match for.
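To make the link-following idea concrete, here is a minimal breadth-first crawler in Python. It's a sketch, not how Googlebot actually works: the `crawl` function, the `LinkExtractor` class, and the example.com pages are all hypothetical, and a plain dictionary stands in for real HTTP requests so the example runs offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag in a page's HTML."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first link discovery starting from seed_urls.

    fetch(url) returns the page's HTML, or None if it can't be retrieved.
    Returns the URLs that were fetched, in the order they were fetched.
    """
    queue = deque(seed_urls)
    seen = set(seed_urls)
    discovered = []
    while queue and len(discovered) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        discovered.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so each page is queued once.
            absolute, _ = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return discovered


# A tiny in-memory "web" standing in for real HTTP fetches (hypothetical URLs).
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1#comments">Post 1</a>',
    "https://example.com/blog/post-1": "<p>No outgoing links.</p>",
}

print(crawl(["https://example.com/"], PAGES.get))  # all four pages, in discovery order
```

Notice that the crawler only learns about a URL because a page it already fetched linked to it; a page nobody links to never enters the queue.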

What is a search engine index?

Search engines process and store information they find in an index, a huge database of all the content they’ve discovered and deem good enough to serve up to searchers.
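The core lookup structure behind a search index is often explained as an inverted index: a table that maps each word to the pages containing it. The sketch below assumes made-up page paths and a deliberately naive tokenizer, and it shows only that core structure; a real search engine index stores far more about each page.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each word to the set of URLs whose content contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


# Hypothetical pages: path -> page text.
pages = {
    "/shoes": "Running shoes and trail shoes in every size",
    "/socks": "Wool socks for running",
}
index = build_index(pages)
print(sorted(index["running"]))  # ['/shoes', '/socks']
print(sorted(index["wool"]))     # ['/socks']
```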

Search engine ranking

When someone performs a search, search engines scour their index for highly relevant content and then order that content in the hopes of solving the searcher's query. This ordering of search results by relevance is known as ranking. In general, you can assume that the higher a website is ranked, the more relevant the search engine believes that site is to the query.
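Real ranking weighs many signals, but the basic idea of scoring pages against a query and sorting by score can be shown with simple term counting. This is a hypothetical, deliberately crude scoring rule (count how often the query's words appear on each page), not a description of Google's algorithm.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rank(query, pages):
    """Return URLs ordered by how often they mention the query's words.

    Pages that mention none of the words are left out entirely.
    """
    terms = tokenize(query)
    scores = {}
    for url, text in pages.items():
        counts = Counter(tokenize(text))
        score = sum(counts[term] for term in terms)
        if score > 0:
            scores[url] = score
    # Highest score first; ties broken alphabetically so the order is stable.
    return sorted(scores, key=lambda url: (-scores[url], url))


# Hypothetical pages: path -> page text.
pages = {
    "/shoes": "Running shoes and trail shoes in every size",
    "/socks": "Wool socks for running",
    "/hats": "Sun hats",
}
print(rank("running shoes", pages))  # ['/shoes', '/socks']
```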

It’s possible to block search engine crawlers from part or all of your site, or instruct search engines to avoid storing certain pages in their index. While there can be reasons for doing this, if you want your content found by searchers, you have to first make sure it’s accessible to crawlers and is indexable. Otherwise, it’s as good as invisible.

By the end of this chapter, you’ll have the context you need to work with the search engine, rather than against it!

Note: In SEO, not all search engines are equal

Many beginners wonder about the relative importance of particular search engines. Most people know that Google has the largest market share, but how important is it to optimize for Bing, Yahoo, and others? The truth is that despite the existence of more than 30 major web search engines, the SEO community really only pays attention to Google. Why? The short answer is that Google is where the vast majority of people search the web. If we include Google Images, Google Maps, and YouTube (a Google property), more than 90% of web searches happen on Google, nearly 20 times as many as Bing and Yahoo combined.

Crawling: Can search engines find your site?

As you've just learned, making sure your site gets crawled and indexed is a prerequisite for showing up in the SERPs. First things first: You can check to see how many and which pages of your website have been indexed by Google using "site:yourdomain.com", an advanced search operator.

Head to Google and type "site:yourdomain.com" into the search bar. This will return the results Google has in its index for the specified site.

The number of results Google displays (the “About __ results” count at the top of the results page) isn't exact, but it does give you a solid idea of which pages are indexed on your site and how they are currently showing up in search results.

For more accurate results, monitor and use the Index Coverage report in Google Search Console. You can sign up for a free Google Search Console account if you don't currently have one. With this tool, you can submit sitemaps for your site and monitor how many submitted pages have actually been added to Google's index, among other things.

If you're not showing up anywhere in the search results, there are a few possible reasons why:

- Your site is brand new and hasn't been crawled yet.

- Your site isn't linked to from any external websites.

- Your site's navigation makes it hard for a robot to crawl it effectively.

- Your site contains some basic code called crawler directives that is blocking search engines.

- Your site has been penalized by Google for spammy tactics.

If your site doesn't have any other sites linking to it, you still might be able to get it indexed by submitting your XML sitemap in Google Search Console or manually submitting individual URLs to Google. There's no guarantee they'll include a submitted URL in their index, but it's worth a try!

Can search engines see your whole site?

Sometimes a search engine will be able to find parts of your site by crawling, but other pages or sections might be obscured for one reason or another. It's important to make sure that search engines are able to discover all the content you want indexed, and not just your homepage.

Ask yourself this: Can the bot crawl through your website, and not just to it?

Is your content hidden behind login forms?

If you require users to log in, fill out forms, or answer surveys before accessing certain content, search engines won't see those protected pages. A crawler is definitely not going to log in.

Are you relying on search forms?

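Robots cannot use search forms. Some people believe that if they place a search box on their site, search engines will be able to find everything their visitors search for. They won't: crawlers don't type queries, so content that can only be reached through your site search stays hidden from them.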

Is text hidden within non-text content?

Non-text media forms (images, video, GIFs, etc.) should not be used to display text that you wish to be indexed. While search engines are getting better at recognizing images, there's no guarantee they will be able to read and understand that text just yet. It's always best to add text within the HTML markup of your webpage.

Can search engines follow your site navigation?

Just as a crawler needs to discover your site via links from other sites, it needs a path of links on your own site to guide it from page to page. If you’ve got a page you want search engines to find but it isn’t linked to from any other pages, it’s as good as invisible. Many sites make the critical mistake of structuring their navigation in ways that are inaccessible to search engines, hindering their ability to get listed in search results.
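One way to catch pages that are "as good as invisible" is to compare the pages you want indexed against the pages reachable by following links from your homepage. In this sketch the link map, page paths, and `find_orphans` helper are hypothetical; in practice you might build the link map from a crawl of your own site.

```python
def find_orphans(known_pages, links, homepage):
    """Return pages that can't be reached by following internal links from the homepage.

    known_pages: every URL you want indexed (e.g. exported from your CMS).
    links: dict mapping each URL to the URLs it links to.
    """
    reachable = {homepage}
    stack = [homepage]
    while stack:
        page = stack.pop()
        for target in links.get(page, []):
            if target not in reachable:
                reachable.add(target)
                stack.append(target)
    return sorted(set(known_pages) - reachable)


# Hypothetical internal link map and page list.
links = {
    "/": ["/products", "/about"],
    "/products": ["/products/red-shoes"],
}
known = ["/", "/about", "/products", "/products/red-shoes", "/spring-sale"]
print(find_orphans(known, links, "/"))  # ['/spring-sale']
```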

Common navigation mistakes that can keep crawlers from seeing all of your site:

- Having a mobile navigation that shows different results than your desktop navigation.

- Any type of navigation where the menu items are not in the HTML, such as JavaScript-enabled navigations. Google has gotten much better at crawling and understanding JavaScript, but it’s still not a perfect process. The surest way to ensure something gets found, understood, and indexed by Google is to put it in the HTML.

- Personalization, or showing unique navigation to a specific type of visitor versus others, which could appear to be cloaking to a search engine crawler.

- Forgetting to link to a primary page on your website through your navigation. Remember, links are the paths crawlers follow to new pages!

This is why it's essential that your website has a clear navigation and helpful URL folder structures.
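The JavaScript point above is easy to see with a parser that reads only raw HTML. The sketch below uses Python's built-in `html.parser`, and the markup is hypothetical. Google does render JavaScript, so this isn't a model of Googlebot, but it shows why links that exist only after a script runs (or only inside an `onclick` handler) are the ones at risk.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects <a href> values exactly as they appear in the raw HTML."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


raw_html = """
<nav id="menu">
  <a href="/products">Products</a>
  <span onclick="window.location.href='/pricing'">Pricing</span>
</nav>
<script>
  // This link only exists after the browser runs the script.
  const link = document.createElement("a");
  link.href = "/contact";
  link.textContent = "Contact";
  document.getElementById("menu").appendChild(link);
</script>
"""

parser = LinkExtractor()
parser.feed(raw_html)
print(parser.links)  # ['/products']
```

Only the plain `<a href>` link is visible in the HTML itself; "/pricing" and "/contact" depend on JavaScript.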

Information architecture

Information architecture is the practice of organizing and labeling content on a website to improve efficiency and findability for users. The best information architecture is intuitive, meaning that users shouldn't have to think very hard to flow through your website or to find something.

Your site should also have a useful 404 (page not found) page for when a visitor clicks on a dead link or mistypes a URL. The best 404 pages allow users to click back into your site so they don’t bounce off just because they tried to access a nonexistent link.
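Here's a minimal sketch of a helpful 404 page as a tiny Python WSGI app. The page paths and wording are hypothetical. The key design choice is to send a genuine `404 Not Found` status along with the friendly page, so crawlers learn the URL doesn't exist while visitors still get links back into the site.

```python
# Hypothetical site content: path -> HTML body.
PAGES = {
    "/": "<h1>Home</h1>",
    "/about": "<h1>About us</h1>",
}

NOT_FOUND_HTML = """<h1>Sorry, we couldn't find that page</h1>
<p>It may have moved. Try one of these instead:</p>
<ul>
  <li><a href="/">Home</a></li>
  <li><a href="/about">About us</a></li>
</ul>"""


def app(environ, start_response):
    """Serve known pages; answer anything else with a real 404 that links back into the site."""
    path = environ.get("PATH_INFO", "/")
    if path in PAGES:
        status, body = "200 OK", PAGES[path]
    else:
        # A genuine 404 status (not 200) tells crawlers the URL doesn't exist,
        # while the body gives human visitors a way to keep browsing.
        status, body = "404 Not Found", NOT_FOUND_HTML
    data = body.encode("utf-8")
    start_response(status, [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(data))),
    ])
    return [data]
```

To try it locally, you could pass `app` to `wsgiref.simple_server.make_server` and visit a URL that doesn't exist.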

Tell search engines how to crawl your site

In addition to making sure crawlers can reach your most important pages, it’s also pertinent to note that you’ll have pages on your site you don’t want them to find. These might include things like old URLs that have thin content, duplicate URLs (such as sort-and-filter parameters for e-commerce), special promo code pages, staging or test pages, and so on.

Blocking pages from search engines can also help crawlers prioritize your most important pages and maximize your crawl budget (the average number of pages a search engine bot will crawl on your site).

Crawler directives allow you to control what you want Googlebot to crawl and index using a robots.txt file, meta tag, sitemap.xml file, or Google Search Console.

Robots.txt

Robots.txt files are located in the root directory of websites (ex. yourdomain.com/robots.txt) and suggest which parts of your site search engines should and shouldn't crawl via specific robots.txt directives. This is a great solution when trying to block search engines from non-private pages on your site.

Don't use robots.txt to hide private or sensitive pages: the file is publicly accessible to users and bots alike, so listing those URLs there can point people straight to them.
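Python's standard library includes `urllib.robotparser`, which applies robots.txt rules the way a well-behaved crawler would. The robots.txt content below is hypothetical, and this parser doesn't replicate every extension Googlebot supports, so treat it as a quick sanity check rather than the final word.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep all crawlers out of staging and promo-code pages,
# and point them at the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /staging/
Disallow: /promo-codes/

Sitemap: https://yourdomain.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ["https://yourdomain.com/blog/seo-tips",
            "https://yourdomain.com/staging/new-homepage"]:
    print(url, parser.can_fetch("Googlebot", url))
# https://yourdomain.com/blog/seo-tips True
# https://yourdomain.com/staging/new-homepage False
```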

Pro tip:

- If Googlebot can't find a robots.txt file for a site (40X HTTP status code), it proceeds to crawl the site.

- If Googlebot finds a robots.txt file for a site (20X HTTP status code), it will usually abide by the suggestions and proceed to crawl the site.

- If Googlebot finds neither a 20X nor a 40X HTTP status code (ex. a 501 server error), it can't determine whether you have a robots.txt file and won't crawl your site.
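The three rules in the pro tip boil down to a decision on the status code of the robots.txt request. This hypothetical helper encodes them as stated above; it treats every status other than 2xx and 4xx the way the third rule describes and doesn't model redirects, which real crawlers follow before deciding.

```python
CRAWL_FOLLOWING_RULES = "crawl, following robots.txt"
CRAWL_EVERYTHING = "crawl (no robots.txt found)"
DO_NOT_CRAWL = "don't crawl (robots.txt status unknown)"


def robots_txt_decision(status_code):
    """Apply the three robots.txt rules above to the status code of a robots.txt request."""
    if 200 <= status_code <= 299:
        return CRAWL_FOLLOWING_RULES
    if 400 <= status_code <= 499:
        return CRAWL_EVERYTHING
    # Anything else, e.g. a 5xx server error: the crawler can't tell whether
    # a robots.txt file exists, so it holds off.
    return DO_NOT_CRAWL


for code in (200, 404, 501):
    print(code, robots_txt_decision(code))
```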

Meta directives

The two types of meta directives are the meta robots tag (more commonly used) and the X-Robots-Tag. Each gives crawlers firmer instructions than robots.txt about how to crawl and index a URL's content.
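Here is what the two forms look like in practice, with a simplified sketch of how a crawler might combine them. The markup, header values, and `robots_directives` helper are hypothetical, and the sketch ignores user-agent-specific forms such as `X-Robots-Tag: googlebot: noindex`.

```python
from html.parser import HTMLParser


def split_directives(value):
    """Turn a value like 'noindex, nofollow' into {'noindex', 'nofollow'}."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.directives |= split_directives(attrs.get("content") or "")


def robots_directives(html, headers):
    """Combine directives from meta robots tags and any X-Robots-Tag header."""
    parser = MetaRobotsParser()
    parser.feed(html)
    directives = set(parser.directives)
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            directives |= split_directives(value)
    return directives


# Meta robots tag, placed in a page's <head>:
page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(sorted(robots_directives(page, {})))  # ['nofollow', 'noindex']

# X-Robots-Tag, sent as an HTTP response header (also works for non-HTML files):
print(sorted(robots_directives("", {"X-Robots-Tag": "noindex"})))  # ['noindex']
```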

The X-Robots-Tag provides more flexibility and functionality if you want to block search engines at scale, because you can use regular expressions, block non-HTML files, and apply sitewide noindex tags.

These are the best options for blocking more sensitive*/private URLs from search engines.

*For very sensitive URLs, it is best practice to remove them entirely or require a secure login to view the pages.
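The X-Robots-Tag is usually set in web server configuration, but the same idea can be sketched as Python WSGI middleware: match the requested path against a regular expression and add a `noindex` header to non-HTML files. The pattern and both apps below are hypothetical.

```python
import re

# Hypothetical rule: keep PDFs and Word documents out of the index.
NOINDEX_PATTERN = re.compile(r"\.(pdf|docx?)$", re.IGNORECASE)


def x_robots_middleware(app):
    """Wrap a WSGI app so responses for matching paths carry 'X-Robots-Tag: noindex'."""
    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "")

        def start_response_with_tag(status, headers, exc_info=None):
            if NOINDEX_PATTERN.search(path):
                headers = list(headers) + [("X-Robots-Tag", "noindex")]
            return start_response(status, headers, exc_info)

        return app(environ, start_response_with_tag)
    return wrapped


def file_server(environ, start_response):
    """Stand-in app that returns the same body for every path."""
    start_response("200 OK", [("Content-Type", "application/octet-stream")])
    return [b"file contents"]


app = x_robots_middleware(file_server)
```

Because the header travels with the HTTP response rather than inside the page, this works for files that have no HTML `<head>` to put a meta tag in.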

WordPress Tip: In Dashboard > Settings > Reading, make sure the "Search Engine Visibility" box is not checked. When checked, it blocks search engines from coming to your site via your robots.txt file!

Avoid these common pitfalls, and you'll have clean, crawlable content that will allow bots easy access to your pages.

Sitemaps

A sitemap is just what it sounds like: a list of URLs on your site that crawlers can use to discover and index your content. One of the easiest ways to ensure Google is finding your highest priority pages is to create a file that meets Google's standards and submit it through Google Search Console. While submitting a sitemap doesn’t replace the need for good site navigation, it can certainly help crawlers follow a path to all of your important pages.
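If your CMS doesn't generate a sitemap for you, a basic one is easy to build. This sketch follows the sitemaps.org XML format (a `urlset` of `url` entries, each with a required `loc` and an optional `lastmod`); the URLs and dates are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML for (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, <, > for us
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2018-07-25
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap([
    ("https://yourdomain.com/", "2018-07-25"),
    ("https://yourdomain.com/blog/seo-tips", None),
])
print(sitemap_xml)
```

You could save the output as sitemap.xml (commonly at your site's root) and submit it through Google Search Console.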

Google Search Console

Some sites (most commonly e-commerce sites) make the same content available on multiple different URLs by appending certain parameters to URLs. If you’ve ever shopped online, you’ve likely narrowed down your search via filters. For example, you may search for “shoes” on Amazon, and then refine your search by size, color, and style. Each time you refine, the URL changes slightly. How does Google know which version of the URL to serve to searchers? Google does a pretty good job of figuring out the representative URL on its own, but you can use the URL Parameters feature in Google Search Console to tell Google exactly how you want it to treat your pages.
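To illustrate the problem, the sketch below collapses filtered variants of a URL into one representative URL by dropping parameters you've decided don't deserve their own index entry. It is not how Google chooses a representative URL, nor how the URL Parameters feature works; the parameter names and URLs are hypothetical. A helper like this could be useful when deciding which URL to link to internally or list in your sitemap.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical filter parameters we don't want indexed as separate URLs.
IGNORED_PARAMS = {"size", "color", "style"}


def representative_url(url):
    """Drop filter-only parameters so filtered variants collapse to one URL."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query)
            if key not in IGNORED_PARAMS]
    # Rebuild the URL without the ignored parameters (and without any #fragment).
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


variants = [
    "https://shop.example.com/shoes?color=red",
    "https://shop.example.com/shoes?size=9&color=red&style=trail",
    "https://shop.example.com/shoes",
]
print({representative_url(u) for u in variants})  # {'https://shop.example.com/shoes'}
```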

Indexing: How do search engines understand and remember your site?

Once you’ve ensured your site has been crawled, the next order of business is to make sure it can be indexed. That’s right — just because your site can be discovered and crawled by a search engine doesn’t necessarily mean that it will be stored in their index. In the previous section on crawling, we discussed how search engines discover your web pages. The index is where your discovered pages are stored. After a crawler finds a page, the search engine renders it just like a browser would. In the process of doing so, the search engine analyzes that page's contents. All of that information is stored in its index.

Read on to learn about how indexing works and how you can make sure your site makes it into this all-important database.

Can I see how a Googlebot crawler sees my pages?

Yes, the cached version of your page reflects a snapshot of the last time Googlebot crawled it.

Google crawls and caches web pages at different frequencies. More established, well-known sites that post often, like https://www.nytimes.com, will be crawled more frequently than the much-less-famous website for Roger the Mozbot’s side hustle, http://www.rogerlovescupcakes.com (if only it were real…).

You can view what the cached version of a page looks like by clicking the drop-down arrow next to the URL in the SERP and choosing "Cached".